Circle Research in collaboration with Blockchain at Berkeley releases an open-source web app for interpreting and executing user intents with LLMs.

TL;DR: Large Language Models (LLMs) can be used to decipher complex user intents from simple written text to execute transactions on the blockchain. TXT2TXN stands for text-to-transaction and uses an LLM to parse freeform English text, classify it as a type of action, and convert it into a signed intent to be executed on-chain.

Highlights:

- Circle Research releases TXT2TXN: an open-source web app for pairing user intents with LLMs to facilitate transactions on the blockchain.

- An intent refers to a user's expression of their desired outcome without specifying the exact steps the app should take to achieve it.

- TXT2TXN provides a glimpse into the future of crypto application UX made possible by LLMs.

Introduction

Blockchain at Berkeley and Circle Research recently collaborated on a project exploring the combination of intents and AI. In this article, we’ll cover background on intents, how LLMs can be usefully paired with an intent-based architecture, and an open-source prototype that provides a starting point for the future of crypto application UX.

Background

When we initially started this collaborative project, our main goals were to 1) better understand the landscape of intents research and work, and 2) find an angle where we could contribute. But first: what do we mean by intents?

Stated simply, “intents” refers to a user's expression of their desired outcome without specifying the exact steps the app should take to achieve it. Here are some additional definitions from across the industry:

- “Informally, an intent is a signed set of declarative constraints which allow a user to outsource transaction creation to a third party without relinquishing full control to the transacting party.” - Paradigm

- “An intent is an expression of an individual’s desired end state(s). Whenever a person needs something, they subconsciously generate a virtual intent in their head. This can be anything, from wanting fish, coffee, a pizza, or even an NFT.” - Anoma

- “An "intent" generally refers to a predefined action or set of actions that a user or system wishes to execute. It represents the user's desired outcome within the blockchain environment. Intents are used to simplify and abstract complex interactions, making it easier for users to specify what they want to achieve without worrying about the underlying technical details. For example, in decentralized finance (DeFi), an intent might be to swap one cryptocurrency for another at the best available rate. The blockchain system then interprets this intent and executes the necessary transactions to fulfill it, interacting with various smart contracts and liquidity pools as needed.” - ChatGPT-4

You get the idea. Most existing blockchain applications operate on a transaction-based architecture, where the user-submitted transaction specifies the “how.” In an intent-based architecture, the user specifies only the “what,” without the “how.” The application is in charge of understanding, and sometimes creating, the exact steps that lead to the desired outcome.
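The difference between specifying the “how” and the “what” can be made concrete with two data shapes. The sketch below is illustrative only: the `Transaction` and `SwapIntent` classes and their fields are hypothetical, not the format of any particular protocol, and tokens are named by symbol rather than address for readability. A transaction pins down the exact call, while an intent only states constraints that any execution path must satisfy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    """Transaction-based (hypothetical shape): the user signs *how* to act."""
    to: str          # contract to call (hex address)
    data: bytes      # ABI-encoded calldata chosen by the app
    value: int = 0   # native token attached, in wei


@dataclass(frozen=True)
class SwapIntent:
    """Intent-based (hypothetical shape): the user signs *what* they want."""
    sell_token: str
    buy_token: str
    sell_amount: int      # most the user is willing to give up (base units)
    min_buy_amount: int   # worst acceptable amount received (base units)
    deadline: int         # unix timestamp after which the intent expires

    def is_satisfied_by(self, sold: int, bought: int, timestamp: int) -> bool:
        """Any execution path is acceptable if its end state meets the constraints."""
        return (
            sold <= self.sell_amount
            and bought >= self.min_buy_amount
            and timestamp <= self.deadline
        )


# Example: sell 1 DAI (18 decimals) for at least 0.99 USDC (6 decimals).
intent = SwapIntent("DAI", "USDC", sell_amount=10**18, min_buy_amount=990_000,
                    deadline=1_700_000_000)
print(intent.is_satisfied_by(sold=10**18, bought=995_000, timestamp=1_699_999_000))  # True
print(intent.is_satisfied_by(sold=10**18, bought=980_000, timestamp=1_699_999_000))  # False
```

Note that `SwapIntent` says nothing about pools or routes; whoever executes it is free to choose the path.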

Eventually, an intent must be executed on-chain via a transaction. The parties responsible for executing intents on-chain are typically referred to as “solvers.” The infrastructure and solution can vary depending on the type of intent; for instance, several protocols are already live that can solve for user intents in a specialized context. Intent protocols for swapping tokens include:

- 0x protocol is able to settle signed limit orders (“I am willing to trade 1 unit of token X for 2 units of token Y”)

- UniswapX also offers on-chain settlement of signed orders, with the added ability to specify a price decay function so that the sale resembles a Dutch auction

- CoW Protocol settles off-chain orders in batches, helping to realize coincidence of wants (CoW) between swappers.
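The constraints behind these order types can be sketched in a few lines. This is a simplified illustration, not the contract logic of 0x or UniswapX: the hypothetical `limit_order_satisfied` checks that a (possibly partial) fill pays at least the order's rate, and the hypothetical `dutch_output` computes the minimum output of an order whose price decays linearly over a time window, in the spirit of UniswapX's Dutch orders. Amounts are integers in token base units.

```python
from fractions import Fraction


def limit_order_satisfied(sell_amount: int, limit_buy_amount: int,
                          filled_sell: int, filled_buy: int) -> bool:
    """True if a (possibly partial) fill pays at least the order's limit rate.

    An order to trade 1 unit of X for 2 units of Y accepts any fill whose
    ratio filled_buy / filled_sell is at least 2.
    """
    if filled_sell <= 0 or filled_sell > sell_amount:
        return False  # nothing sold, or more sold than the order allows
    # Exact rational comparison avoids floating-point rounding.
    return Fraction(filled_buy, filled_sell) >= Fraction(limit_buy_amount, sell_amount)


def dutch_output(start_amount: int, end_amount: int,
                 decay_start: int, decay_end: int, now: int) -> int:
    """Minimum output the swapper requires at time `now` (linear decay)."""
    if now <= decay_start:
        return start_amount
    if now >= decay_end:
        return end_amount
    elapsed = now - decay_start
    duration = decay_end - decay_start
    # Linear interpolation in integer arithmetic (the decrease is floored).
    return start_amount - (start_amount - end_amount) * elapsed // duration


print(limit_order_satisfied(sell_amount=1, limit_buy_amount=2,
                            filled_sell=1, filled_buy=2))  # True
print(dutch_output(2000, 1900, decay_start=0, decay_end=100, now=50))  # 1950
```

As time passes, the Dutch order accepts less output, so the first solver willing to fill at the current price wins.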

There are also protocols working toward general-purpose architectures. Anoma is one example: it provides infrastructure (including an intent gossip layer) for any intent-based application. We’ve found that solutions serving general-purpose intents, as opposed to specialized contexts, are both earlier in development and more complex.

LLMs In an Intent-Based Architecture

In an intent-based architecture, the UI’s job is simplified: its main task is to determine the user’s desired action and transform it into a format that solvers can ingest.

For example, in a transaction-based model, a trading application may use Uniswap pools to execute a token swap. This requires determining the Uniswap pool(s) through which to route the trade. Another approach would be to aggregate the liquidity of several protocols, which requires gathering even more state and understanding the interfaces of many different protocols.
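To illustrate how much state a transaction-based app has to handle, here is a minimal routing sketch. It assumes Uniswap V2-style constant-product pools with a 0.3% fee (V3's concentrated liquidity works differently), considers only single-hop routes, and uses made-up pool names and reserves; `Pool`, `amount_out`, and `best_pool` are hypothetical names.

```python
from typing import NamedTuple


class Pool(NamedTuple):
    name: str
    reserve_in: int   # pool reserve of the token being sold
    reserve_out: int  # pool reserve of the token being bought


def amount_out(pool: Pool, amount_in: int) -> int:
    """Constant-product quote with a 0.3% fee, as in Uniswap V2's getAmountOut."""
    amount_in_with_fee = amount_in * 997
    numerator = amount_in_with_fee * pool.reserve_out
    denominator = pool.reserve_in * 1000 + amount_in_with_fee
    return numerator // denominator


def best_pool(pools: list[Pool], amount_in: int) -> tuple[Pool, int]:
    """The app itself must read every candidate pool's reserves to pick a route."""
    quotes = [(pool, amount_out(pool, amount_in)) for pool in pools]
    return max(quotes, key=lambda quote: quote[1])


pools = [Pool("A", 1_000, 1_000), Pool("B", 1_000, 2_000)]
pool, out = best_pool(pools, 10)
print(pool.name, out)  # B 19
```

Even this toy version needs fresh reserves for every candidate pool; a real aggregator would also weigh multi-hop routes, gas costs, and several protocols' interfaces.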

In an intent-based architecture, the application would only need to parse the user’s desired trade and pass that along to solvers. The solver may determine that using a Uniswap pool is optimal, or they may choose to satisfy the swap with their own token balance.
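By contrast, the intent-based app can hand the parsed trade to whoever offers the best outcome. The sketch below models solvers as plain functions that return an offered output amount; `Intent`, `Solver`, and `select_solution` are hypothetical names, and picking the highest valid offer is a simplification of real solver competitions, such as CoW Protocol's, which have their own auction and scoring rules.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Intent:
    sell_token: str
    buy_token: str
    sell_amount: int      # base units the user gives up
    min_buy_amount: int   # worst acceptable amount received


# A solver returns the amount of buy_token it will deliver, or None to decline.
# Whether it routes through an AMM pool or fills from its own inventory is
# invisible to the application.
Solver = Callable[[Intent], Optional[int]]


def select_solution(intent: Intent,
                    solvers: dict[str, Solver]) -> Optional[tuple[str, int]]:
    """Return (solver_name, offered_amount) for the best valid offer, if any."""
    best: Optional[tuple[str, int]] = None
    for name, solve in solvers.items():
        offered = solve(intent)
        if offered is None or offered < intent.min_buy_amount:
            continue  # declined, or would violate the user's constraint
        if best is None or offered > best[1]:
            best = (name, offered)
    return best


intent = Intent("DAI", "USDC", sell_amount=10**18, min_buy_amount=990_000)
example_solvers = {
    "amm_router": lambda i: 995_000,   # e.g. routes through a Uniswap pool
    "inventory": lambda i: 998_000,    # fills from its own token balance
    "stingy": lambda i: 950_000,       # below the user's minimum, ignored
    "offline": lambda i: None,         # declines to quote
}
print(select_solution(intent, example_solvers))  # ('inventory', 998000)
```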

The application’s task is thus reduced to determining the meaning of user input, a task to which LLMs are well suited. As LLMs become more practical, more accurate, and more reliable at classifying diverse inputs, integrating them into the intent workflow is a logical step. Instead of exposing every possible outcome and lever through UI elements, the application can let the user describe the desired outcome in natural language. The LLM then translates that description into a format that solvers can understand and execute.

The Prototype

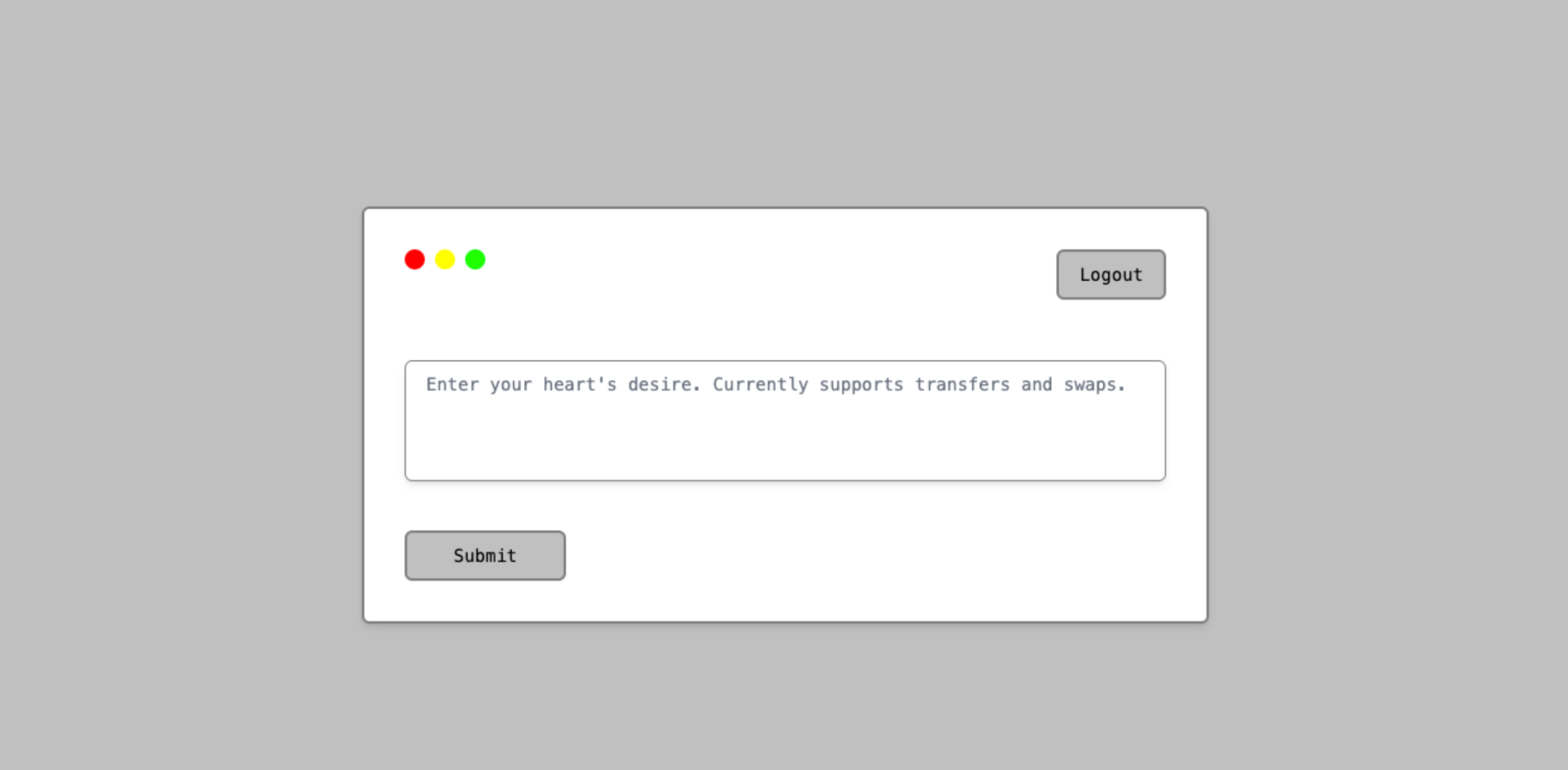

With TXT2TXN, we set out to implement a simple application that leverages an LLM to allow a user to flexibly communicate their intent.

To emphasize flexibility (and, let’s be honest, to minimize our frontend work), we made the frontend a simple textbox. In the textbox, the user can specify their desired action, for instance, “send 1 USDC on Ethereum to kaili.eth.”

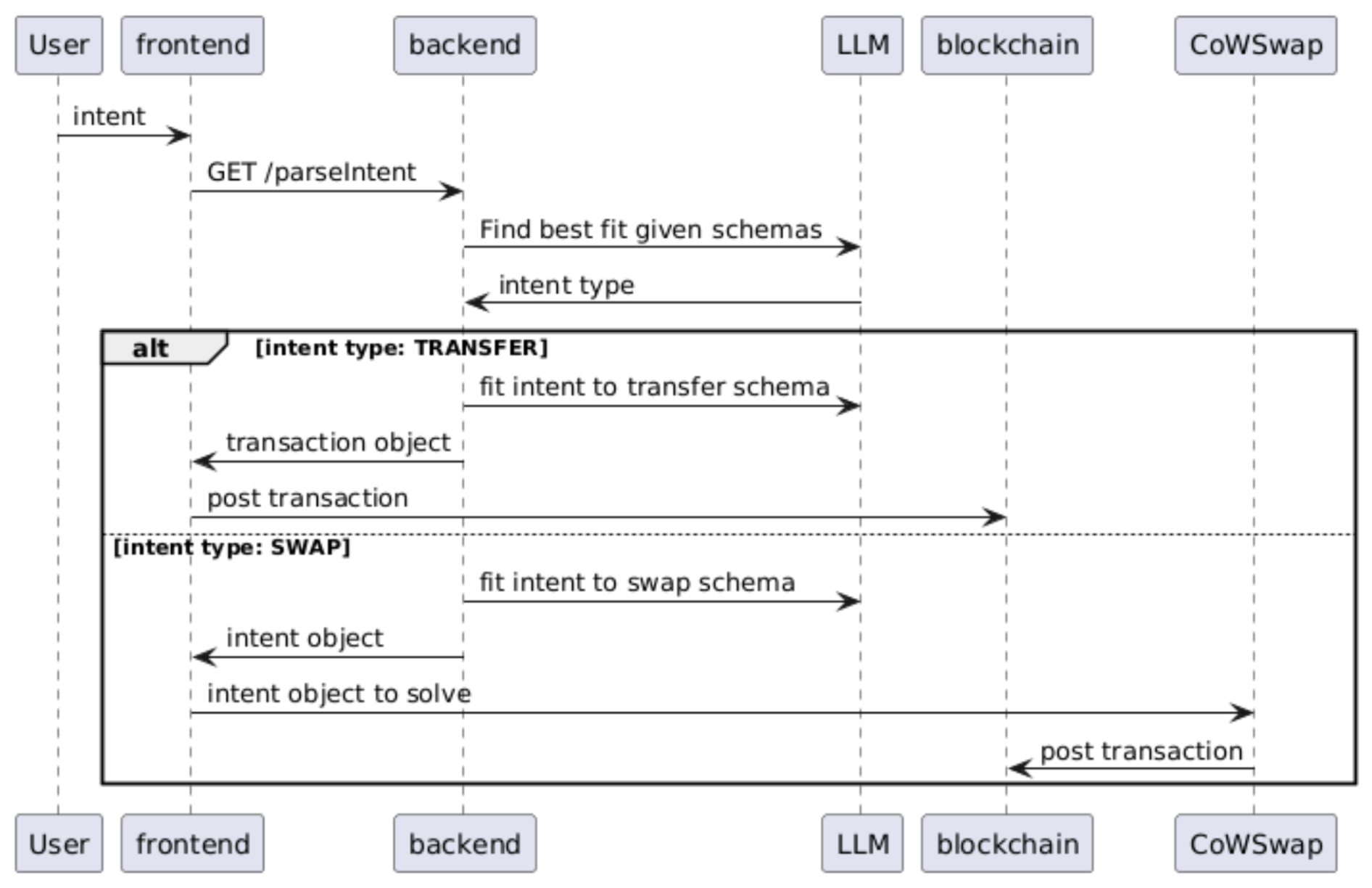

The backend utilizes OpenAI’s GPT-3.5 Turbo to interpret the intent in two stages.

First, the application prompts the LLM to classify the user input into a particular intent type given an array of possible schemas. To simplify the problem, the app currently supports only transfers and swaps, so the possible outcomes in the prompt are transfer, swap, or “no match.”
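Because the model answers in free text, its reply has to be coerced into one of these three outcomes before anything is signed. The backend does this with a `get_valid_response` helper whose implementation the post does not show; the hypothetical `parse_intent_type` below is one way to do it, assuming the `'1'`/`'2'`/`'0'` labels used in the classification prompt.

```python
# Labels the classification prompt asks the model to return.
INTENT_LABELS = {"0": "no_match", "1": "transfer", "2": "swap"}


def parse_intent_type(raw_reply: str) -> str:
    """Map a free-text model reply onto one of the three supported outcomes.

    Whitespace and stray quotes or periods are trimmed; anything that is
    still not exactly one of the expected digits is treated as "no match".
    """
    cleaned = raw_reply.strip().strip("'\".").strip()
    return INTENT_LABELS.get(cleaned, "no_match")


print(parse_intent_type(" 2\n"))    # swap
print(parse_intent_type("1 or 2"))  # no_match
```

Mapping every unexpected reply to “no match” makes the parser fail closed: a malformed answer can stall a request but cannot trigger a transfer or swap.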

```python
import json

# load_schema, create_open_ai_client, extract_response, and get_valid_response
# are helpers from the TXT2TXN backend that this excerpt does not show.

# Load the JSON schemas describing properties for swap and simple transfer
swap_schema = load_schema("schemas/swap.json")
transfer_schema = load_schema("schemas/transfer.json")

# Initialize OpenAI client
client = create_open_ai_client()


def classify_transaction(transaction_text):
    # System message explaining the task
    system_message = {
        "role": "system",
        "content": "Determine if the following transaction text is for a token swap or a transfer. Use the appropriate schema to understand the transaction. Return '1' for transfer, '2' for swap, and '0' for neither. Do not output anything besides this number. If one number is classified for the output, make sure to omit the other two in your generated response.",
    }

    # Messages to set up schema contexts
    swap_schema_message = {
        "role": "system",
        "content": "[Swap Schema] Token Swap Schema:\n" + json.dumps(swap_schema, indent=2),
    }
    transfer_schema_message = {
        "role": "system",
        "content": "[Transfer Schema] Simple Transfer/Send Schema:\n" + json.dumps(transfer_schema, indent=2),
    }

    # User message with the transaction text
    user_message = {"role": "user", "content": transaction_text}

    # Sending the prompt to ChatGPT
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            system_message,
            swap_schema_message,
            transfer_schema_message,
            user_message,
        ],
    )

    # Extracting and interpreting the last message from the completion
    response = extract_response(completion)
    return get_valid_response(response)  # ensure response is either 0, 1, or 2
```

If the first stage identifies the user input as either a swap or a transfer, we move on to the second stage: filling the schema. TXT2TXN prompts the LLM again to fill in the schema that corresponds to the desired intent type, which can then be transformed into a signed CoW Swap order in the case of a swap or a transaction payload in the case of a transfer.

For a swap (the pattern is similar for transfer):

```python
import json

# Assumes `client` and `swap_schema` from the classification step above, and
# `user_input` holding the user's text.

# System message explaining the task and giving hints for each schema
system_message = {
    "role": "system",
    "content": "Please analyze the following transaction text and fill out the JSON schema based on the provided details. All prices are assumed to be in USD.",
}

# Messages to set up Schema
swap_schema_message = {
    "role": "system",
    "content": "Token Swap Schema:\n" + json.dumps(swap_schema, indent=2),
}
instructions_schema_message = {
    "role": "system",
    "content": "The outputted JSON should be an instance of the schema. It is not necessary to include the parameters/constraints that are not directly related to the data provided.",
}

# User message with the transaction text
user_message = {"role": "user", "content": user_input}

# Sending the prompt to ChatGPT; JSON mode makes the reply a JSON object
completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    response_format={"type": "json_object"},
    messages=[
        system_message,
        swap_schema_message,
        instructions_schema_message,
        user_message,
    ],
)
```

The frontend receives the intent type and prompts the user to sign an object that will fulfill their intent.
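For a transfer, the filled schema has to end up as a transaction payload the user can sign. The post does not show that step; as a hedged illustration, here is how a transfer of an ERC-20 token such as USDC (6 decimals) could be encoded by hand using the standard `transfer(address,uint256)` selector. The schema field names `recipient` and `amount`, the helper names, and the addresses are hypothetical placeholders, and an ENS name like kaili.eth is assumed to have been resolved to an address beforehand. An Ethereum library would normally handle this encoding.

```python
from decimal import Decimal

# First 4 bytes of keccak256("transfer(address,uint256)")
TRANSFER_SELECTOR = bytes.fromhex("a9059cbb")


def encode_erc20_transfer(recipient: str, amount: Decimal, decimals: int) -> bytes:
    """ABI-encode calldata for an ERC-20 transfer(recipient, amount) call."""
    if amount < 0:
        raise ValueError("amount must be non-negative")
    base_units = amount.scaleb(decimals)  # e.g. 1 USDC -> 1_000_000 base units
    if base_units != base_units.to_integral_value():
        raise ValueError("amount has more decimal places than the token supports")
    address_bytes = bytes.fromhex(recipient.removeprefix("0x"))
    if len(address_bytes) != 20:
        raise ValueError("recipient must be a 20-byte hex address")
    return (
        TRANSFER_SELECTOR
        + address_bytes.rjust(32, b"\x00")      # address, left-padded to 32 bytes
        + int(base_units).to_bytes(32, "big")   # uint256 amount, big-endian
    )


def transfer_payload(filled_schema: dict, token_address: str, decimals: int) -> dict:
    """Turn a filled (hypothetical) transfer schema into a transaction payload."""
    calldata = encode_erc20_transfer(
        filled_schema["recipient"], Decimal(str(filled_schema["amount"])), decimals
    )
    # The transaction calls the token contract; no native ETH is attached.
    return {"to": token_address, "data": "0x" + calldata.hex(), "value": 0}


# Placeholder addresses stand in for the token contract and the resolved recipient.
payload = transfer_payload(
    {"recipient": "0x" + "22" * 20, "amount": "1"},
    token_address="0x" + "11" * 20,
    decimals=6,  # USDC uses 6 decimals
)
print(payload["data"][:10])  # 0xa9059cbb
```

Working in `Decimal` and rejecting amounts with too many decimal places avoids silently rounding a user's transfer.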

This architecture is illustrated in the diagram below.

Accuracy of LLMs

One of the primary concerns with using an LLM for a crypto frontend is accuracy–whether or not the LLM correctly interprets the user’s intent.

When funds are being moved irreversibly, as they are in most operations performed on public blockchains, the margin for error must be very low.

To get a basic sense of the LLM’s accuracy in categorizing prompts, we assembled a small set of prompts with varying semantics, e.g. “swap 1 DAI for USDC on sepolia” and “transfer 1 USDC to kaili.eth on sepolia,” and manually labeled each with the correct intent schema.

We used these 20 examples to test the model, and the results were promising. Note, however, that the model’s outputs are not deterministic, so results can vary from run to run. These tests are only a cursory look at accuracy, and the topic deserves much more research; see Future Work below.
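The post does not publish its test harness or the 20 labeled examples, but the procedure it describes can be sketched. Because LLM outputs vary between runs, the hypothetical `evaluate` function below classifies each labeled prompt several times and reports both overall accuracy and the share of prompts on which the answers disagreed. The keyword-based `keyword_classifier` is only a stand-in for the real LLM call so that the example runs offline, and the third labeled prompt is invented for illustration.

```python
from typing import Callable


def evaluate(classify: Callable[[str], str],
             labeled: list[tuple[str, str]],
             runs: int = 5) -> dict[str, float]:
    """Score a classifier against hand-labeled prompts.

    Each prompt is classified `runs` times because LLM outputs can differ
    between calls; a prompt counts as unstable if its answers disagree.
    """
    correct = 0
    unstable = 0
    for prompt, expected in labeled:
        answers = [classify(prompt) for _ in range(runs)]
        correct += sum(answer == expected for answer in answers)
        if len(set(answers)) > 1:
            unstable += 1
    return {
        "accuracy": correct / (len(labeled) * runs),
        "unstable_share": unstable / len(labeled),
    }


def keyword_classifier(text: str) -> str:
    """Offline stand-in for the LLM classification call."""
    lowered = text.lower()
    if "swap" in lowered:
        return "swap"
    if "transfer" in lowered or "send" in lowered:
        return "transfer"
    return "no_match"


labeled_prompts = [
    ("swap 1 DAI for USDC on sepolia", "swap"),
    ("transfer 1 USDC to kaili.eth on sepolia", "transfer"),
    ("what's the weather like today?", "no_match"),
]
print(evaluate(keyword_classifier, labeled_prompts))
# {'accuracy': 1.0, 'unstable_share': 0.0}
```

Tracking instability separately matters here: a prompt that is usually right but occasionally wrong is exactly the case that could move funds incorrectly.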

Future Work

This simple prototype is only the beginning of what a crypto application can look like with the help of LLMs and intent-based architecture. There are several possible extensions we’d encourage developers to play around with, such as:

- Have the LLM respond with clarifying questions when it doesn’t understand the user’s intent.

- Allow users to select and execute intents directly in text conversations, e.g. “You owe me $5” => signed intent.

- Support for a wider array of intent types such as limit orders, NFT purchases, and lending.

- Stateful features such as integration with one’s personal address book (LLM would resolve “Mom” to her on-chain address).

- More robust accuracy testing of the LLM, and improved accuracy through techniques such as few-shot learning.

- A visual representation of the intent to confirm the action with the user before signing.
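Few-shot learning, mentioned in the list above, could be tried without changing the model: labeled examples are placed in the conversation before the real input so the model sees the expected answer format. The sketch below assumes the chat-message format used in the classification step; `build_few_shot_messages` and the example prompts (including the invented “no match” case) are hypothetical, and the resulting list could be passed as `messages` to `client.chat.completions.create`.

```python
def build_few_shot_messages(system_prompt: str,
                            examples: list[tuple[str, str]],
                            user_text: str) -> list[dict[str, str]]:
    """Assemble a chat prompt in which labeled examples precede the real input.

    Each example becomes a user turn followed by the assistant answer we want,
    so the model sees the expected format before classifying `user_text`.
    """
    messages = [{"role": "system", "content": system_prompt}]
    for example_text, label in examples:
        messages.append({"role": "user", "content": example_text})
        messages.append({"role": "assistant", "content": label})
    messages.append({"role": "user", "content": user_text})
    return messages


FEW_SHOT_EXAMPLES = [
    ("send 1 USDC on Ethereum to kaili.eth", "1"),
    ("swap 1 DAI for USDC on sepolia", "2"),
    ("what is the price of ETH?", "0"),
]
messages = build_few_shot_messages(
    "Return '1' for transfer, '2' for swap, and '0' for neither.",
    FEW_SHOT_EXAMPLES,
    "transfer 1 USDC to kaili.eth on sepolia",
)
print(len(messages))  # 8
```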

Of course, these ideas raise a number of fascinating questions, each of which could be a substantial research area in its own right:

- How would legal responsibility and obligations fall in a scenario where the LLM may misinterpret a user’s intent?

- This prototype tries to provide a generalized UX for multiple types of intents, but only by stitching together different services for different intent types. Will the optimal long-term design look similar, with each intent type added manually, or will we be able to build a more generalized solving backend?

- Using remote LLMs presents privacy concerns, because what the user submits is not identical to the on-chain data that would be publicly available. In other words, knowing what the user wants can reveal more than what the user ends up actually executing. As a metaphor, you could tell your AI assistant “order the ingredients for a birthday cake,” which reveals different information than your grocery order alone. Running the LLM on the user’s own device would alleviate this concern.

The Code

If you’re interested in reviewing or forking the code, check out our repositories for TXT2TXN:

Frontend: https://github.com/circlefin/txt2txn-web

Backend: https://github.com/circlefin/txt2txn-service

Note that this application was built for research purposes only–it is not meant to be used in production.

Acknowledgements

This project was a collaboration between team members of Blockchain at Berkeley (Niall Mandal, Teo Honda-Scully, Daniel Gushchyan, Naman Kapasi, Tanay Appannagari, Adrian Kwan) and Circle Research (Alex Kroeger, Kaili Wang).

If your team is interested in collaboration with Circle Research on this topic or others, please reach out here.